Description

There is an undirected tree with n nodes labeled from 1 to n, rooted at node 1. The tree is represented by a 2D integer array edges of length n - 1, where edges[i] = [ui, vi] indicates that there is an edge between nodes ui and vi.

Initially, all edges have a weight of 0. You must assign each edge a weight of either 1 or 2.

The cost of a path between any two nodes u and v is the total weight of all edges in the path connecting them.

Select any one node x at the maximum depth. Return the number of ways to assign edge weights in the path from node 1 to x such that its total cost is odd.

Since the answer may be large, return it modulo 109 + 7.

Note: Ignore all edges not in the path from node 1 to x.

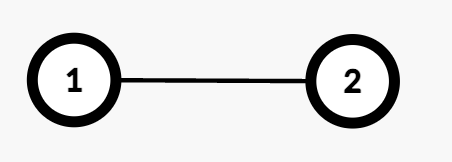

Example 1:

Input: edges = [[1,2]]

Output: 1

Explanation:

- The path from Node 1 to Node 2 consists of one edge (

1 → 2). - Assigning weight 1 makes the cost odd, while 2 makes it even. Thus, the number of valid assignments is 1.

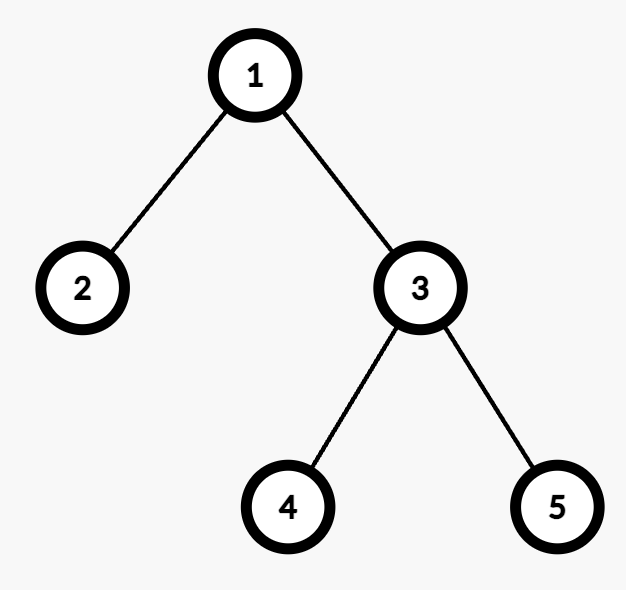

Example 2:

Input: edges = [[1,2],[1,3],[3,4],[3,5]]

Output: 2

Explanation:

- The maximum depth is 2, with nodes 4 and 5 at the same depth. Either node can be selected for processing.

- For example, the path from Node 1 to Node 4 consists of two edges (

1 → 3and3 → 4). - Assigning weights (1,2) or (2,1) results in an odd cost. Thus, the number of valid assignments is 2.

Constraints:

2 <= n <= 105edges.length == n - 1edges[i] == [ui, vi]1 <= ui, vi <= nedgesrepresents a valid tree.

Solution

Python3

class Solution:

def assignEdgeWeights(self, edges: List[List[int]]) -> int:

MOD = 10 ** 9 + 7

N = len(edges)+ 1

graph = defaultdict(list)

for u, v in edges:

u -= 1

v -= 1

graph[u].append(v)

graph[v].append(u)

depth = [0] * N

maxDepth = 0

def dfs(node, prev, d):

nonlocal maxDepth

depth[node] = d

maxDepth = max(maxDepth, depth[node])

for adj in graph[node]:

if adj != prev:

dfs(adj, node, d + 1)

dfs(0, -1, 0)

return pow(2, maxDepth - 1, MOD)